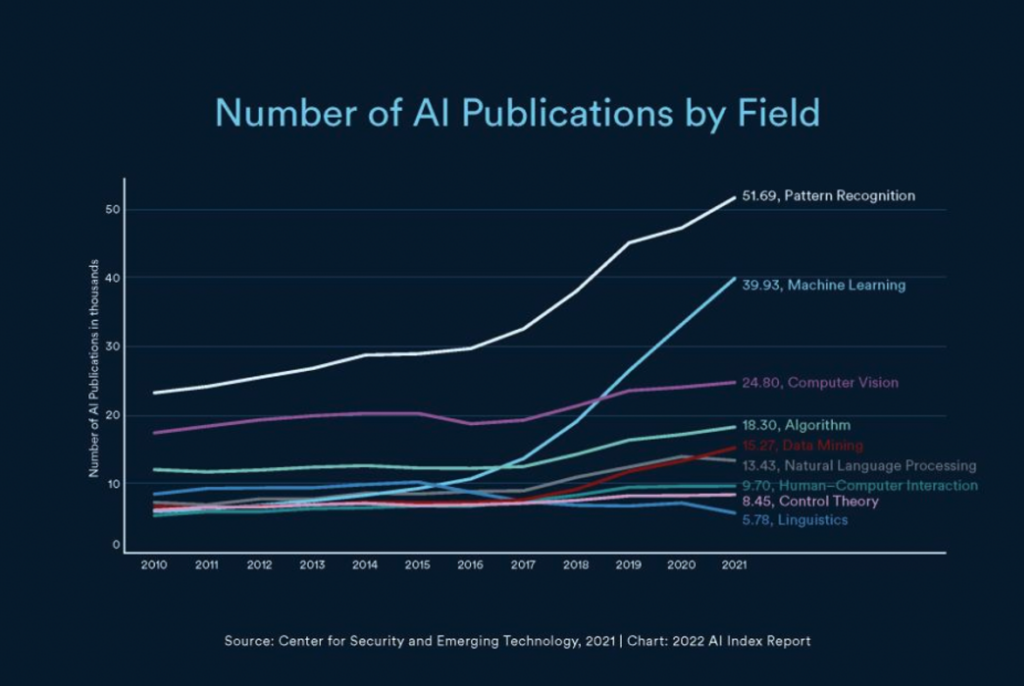

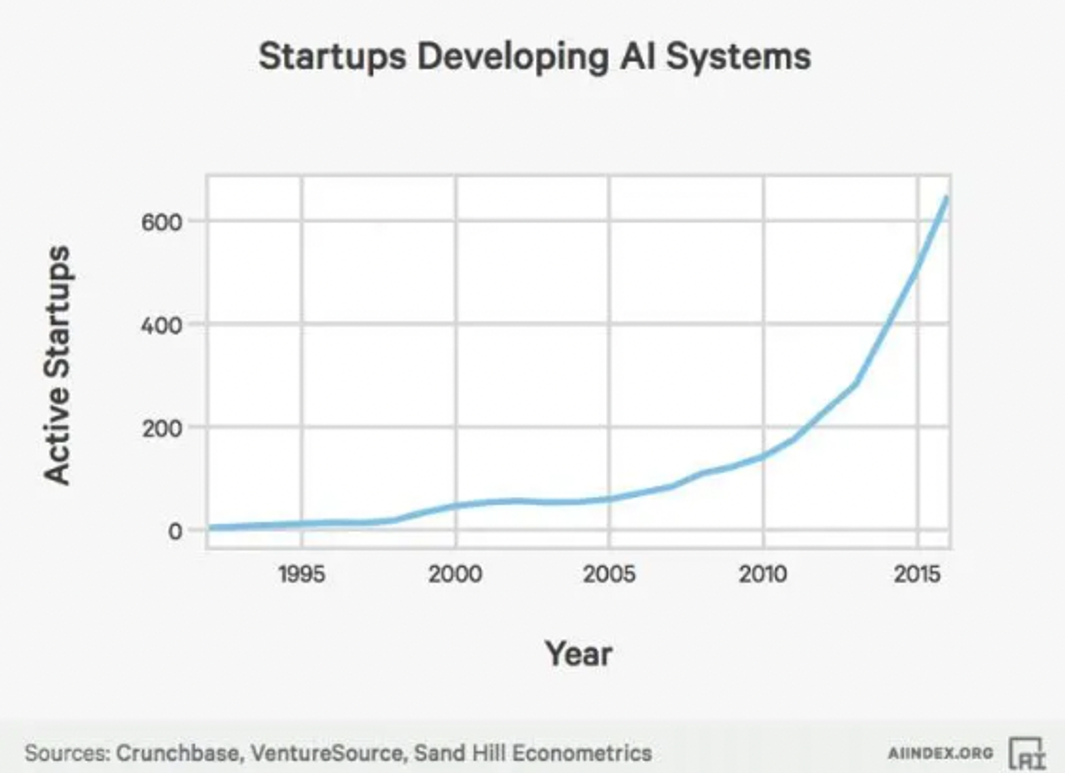

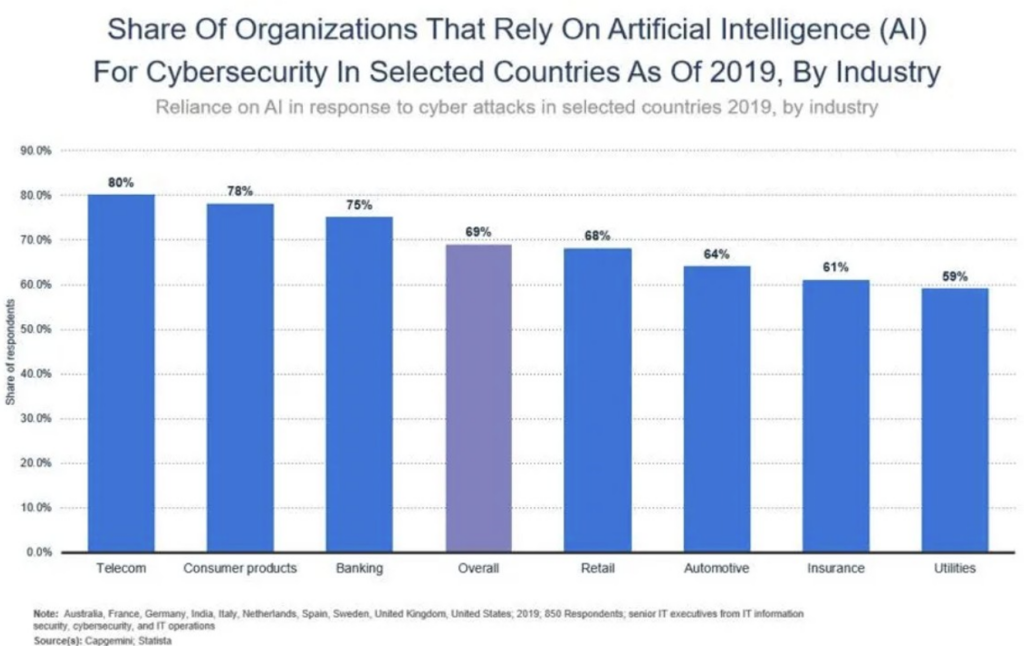

In today’s rapidly changing digital landscape, the growth of AI (artificial intelligence) has proven to be a game-changer both for businesses seeking to defend themselves against threats and for the attackers behind those threats. For companies, AI has emerged as a powerful tool against a wide range of risks. From cybersecurity to fraud detection, AI is changing the way businesses protect their assets and maintain customer trust.

As cyber threats grow in volume and complexity, conventional cybersecurity methods struggle to keep pace. AI, by contrast, aims to build systems that can perform tasks that normally require human intelligence, such as recognizing patterns and making decisions. It has drawn substantial attention recently for its ability to analyze vast datasets efficiently, detect patterns, and make informed decisions in real time. These characteristics make AI a highly attractive technology for strengthening cybersecurity defenses.

Yet alongside AI’s notable achievements in cybersecurity, malicious actors have also succeeded in harnessing AI for their targeted attacks. They have exploited AI advances to craft more effective and cunning strategies against their targets, with social engineering attacks being particularly prevalent.

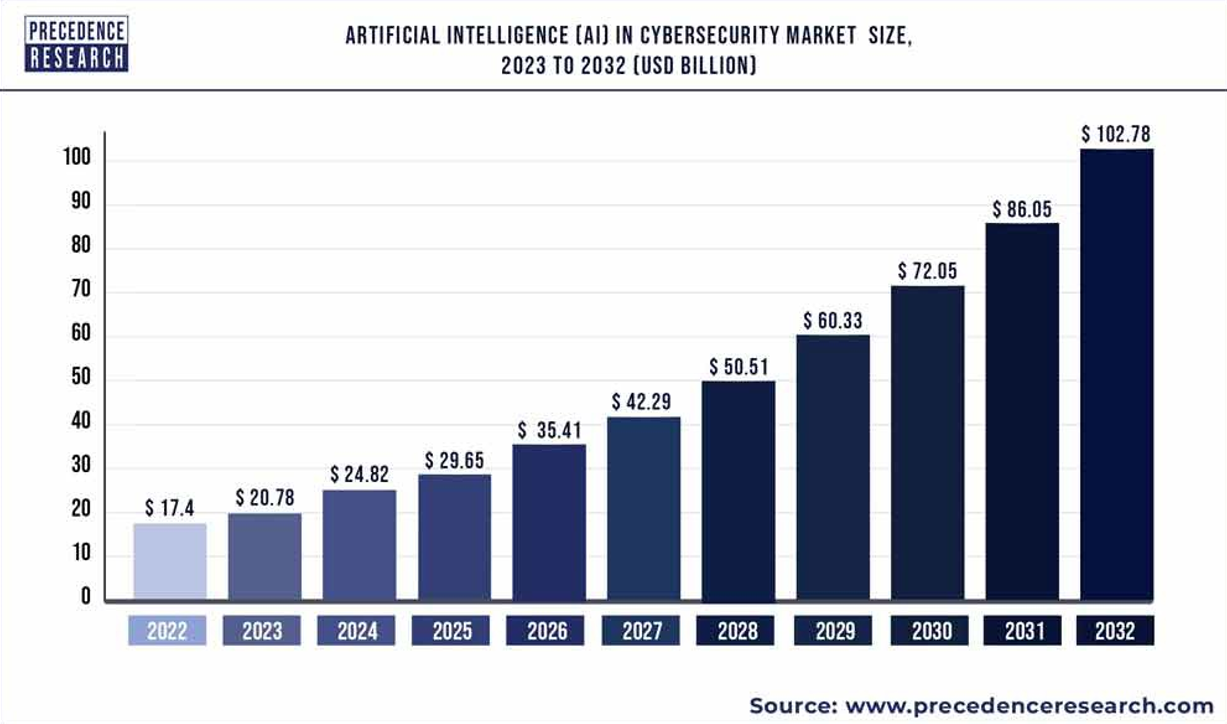

Growth of AI & Cybersecurity

One of the most significant areas where AI shines is cybersecurity, where it has become an indispensable shield for companies facing a constant stream of digital threats. Because it can analyze massive volumes of data and discern intricate patterns, AI is a powerful tool against cyber adversaries. For instance, machine learning algorithms can quickly detect anomalies in network traffic, helping companies detect and mitigate advanced persistent threats (APTs) and distributed denial-of-service (DDoS) attacks. AI-powered threat intelligence platforms can also proactively identify emerging malware strains and vulnerabilities, allowing companies to patch weak points before they’re exploited. From predictive analytics that anticipate potential breaches to adaptive algorithms that learn from evolving attack tactics, AI helps companies stay one step ahead of attackers.

AI’s role in cyber defense is exemplified by its strength in anomaly detection. Unlike traditional security systems that rely on static rules, AI can learn the normal behavior of networks, systems, and users, and promptly flag any deviations that could indicate a breach. This capability has proven vital against sophisticated attacks, such as zero-day exploits or advanced persistent threats, where a subtle deviation from the norm might be the only clue of an impending breach.
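To make the idea of a learned baseline concrete, here is a minimal sketch in Python. It is a simplified illustration under stated assumptions, not how any particular product works: it "learns" normal behavior as the mean and standard deviation of a single metric (a hypothetical hourly upload volume for one workstation) and flags values more than three standard deviations away. Real AI-driven systems model many signals at once, but the principle of learning what is normal and flagging deviations is the same.

```python
from statistics import mean, stdev


class BaselineDetector:
    """Learns the normal range of one numeric metric and flags outliers.

    A deliberately simple z-score baseline (hypothetical example);
    production systems typically model many features together.
    """

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold
        self.mu = 0.0
        self.sigma = 0.0

    def fit(self, history: list[float]) -> None:
        # Learn "normal" behavior from historical observations.
        if len(history) < 2:
            raise ValueError("need at least two observations to learn a baseline")
        self.mu = mean(history)
        self.sigma = stdev(history)

    def is_anomalous(self, value: float) -> bool:
        # Flag values more than `threshold` standard deviations from the mean.
        if self.sigma == 0:
            return value != self.mu
        return abs(value - self.mu) / self.sigma > self.threshold


# Hypothetical data: megabytes uploaded per hour by one workstation.
normal_uploads = [12.0, 15.5, 11.2, 14.8, 13.1, 12.9, 16.0, 14.2]
detector = BaselineDetector(threshold=3.0)
detector.fit(normal_uploads)

print(detector.is_anomalous(14.0))   # False: a typical hour
print(detector.is_anomalous(480.0))  # True: a sudden bulk upload
```

The threshold trades sensitivity for noise: a lower value catches subtler deviations but raises more false alarms for analysts to review.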

Fraud Detection

Another remarkable application of AI lies in fraud detection. Traditional methods of identifying fraud were often slow and error-prone. With AI, companies can now process and analyze huge volumes of transactions quickly, detecting suspicious patterns and anomalies that human reviewers might miss. Banks and payment platforms use AI algorithms to scrutinize transaction histories and identify unusual spending patterns that might signal fraud. For instance, if a credit card is suddenly used for multiple high-value purchases in geographically distant locations, AI can quickly raise a red flag and prompt a security review. This fast, accurate response not only safeguards customers’ finances but also prevents potentially substantial losses for the company, and companies that have adopted AI-driven solutions have successfully reduced fraud incidents.
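The credit card example above can be sketched as a simple "impossible travel" check. This is a hand-written rule rather than a trained model, and the thresholds (purchases of $500 or more, a maximum plausible speed of 900 km/h) and the `Transaction` fields are hypothetical; an AI system would typically learn signals like this from historical data rather than hard-code them. The sketch computes the great-circle (haversine) distance between consecutive high-value purchases on the same card and flags pairs that would require traveling faster than the threshold.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


@dataclass
class Transaction:
    # Hypothetical transaction record for illustration only.
    card_id: str
    timestamp: datetime
    lat: float
    lon: float
    amount: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def flag_impossible_travel(
    txns: list[Transaction],
    min_amount: float = 500.0,     # hypothetical "high-value" threshold
    max_speed_kmh: float = 900.0,  # roughly airliner cruising speed
) -> list[tuple[Transaction, Transaction]]:
    """Return consecutive high-value purchases on the same card that would
    require traveling faster than max_speed_kmh between them."""
    by_card: dict[str, list[Transaction]] = defaultdict(list)
    for t in txns:
        if t.amount >= min_amount:
            by_card[t.card_id].append(t)

    flagged = []
    for card_txns in by_card.values():
        card_txns.sort(key=lambda t: t.timestamp)
        for prev, curr in zip(card_txns, card_txns[1:]):
            km = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
            hours = (curr.timestamp - prev.timestamp).total_seconds() / 3600
            # Distant purchases at the same instant, or implying a speed above the limit, are suspicious.
            if km > 0 and (hours <= 0 or km / hours > max_speed_kmh):
                flagged.append((prev, curr))
    return flagged


# Hypothetical purchases for two cards.
txns = [
    Transaction("card-123", datetime(2023, 8, 1, 10, 0), 40.7128, -74.0060, 1200.0),  # New York
    Transaction("card-123", datetime(2023, 8, 1, 10, 30), 40.7130, -74.0050, 4.50),   # coffee, ignored
    Transaction("card-123", datetime(2023, 8, 1, 11, 0), 51.5074, -0.1278, 900.0),    # London
    Transaction("card-456", datetime(2023, 8, 1, 10, 0), 40.7128, -74.0060, 700.0),   # New York
    Transaction("card-456", datetime(2023, 8, 1, 14, 0), 42.3601, -71.0589, 800.0),   # Boston
]
alerts = flag_impossible_travel(txns)
for prev, curr in alerts:
    print(f"{prev.card_id}: {prev.timestamp:%H:%M} -> {curr.timestamp:%H:%M}")
# card-123: 10:00 -> 11:00
```

Only the New York to London pair is flagged: roughly 5,570 km in one hour is not physically plausible, while the New York to Boston trip takes four hours and stays well under the speed limit.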

Growth of AI & Social Engineering

Social engineering takes many forms, but modern phishing stands out as the most prevalent. Phishing is the practice of sending victims fraudulent messages that convincingly appear to come from reputable sources. While it is most commonly carried out through email, phishing also extends to other channels such as phone calls, text messages, social media, and chat rooms. The primary objective of a phishing attack is to steal sensitive information, such as credit card details or login credentials, or to install malicious software on the victim’s device.

In their relentless pursuit of success, threat actors have harnessed generative AI models such as ChatGPT to write more persuasive phishing messages, making their attacks more sophisticated. Present-day phishing lures typically fall into two fundamental types. The first mimics transactional messages, masquerading as invoices or purchase receipts. The second exploits recipients’ curiosity about specific subjects, such as current events, natural disasters, or the latest celebrity gossip.

Generative AI tools, however, let attackers go beyond these generic lures and craft highly targeted, polished messages.

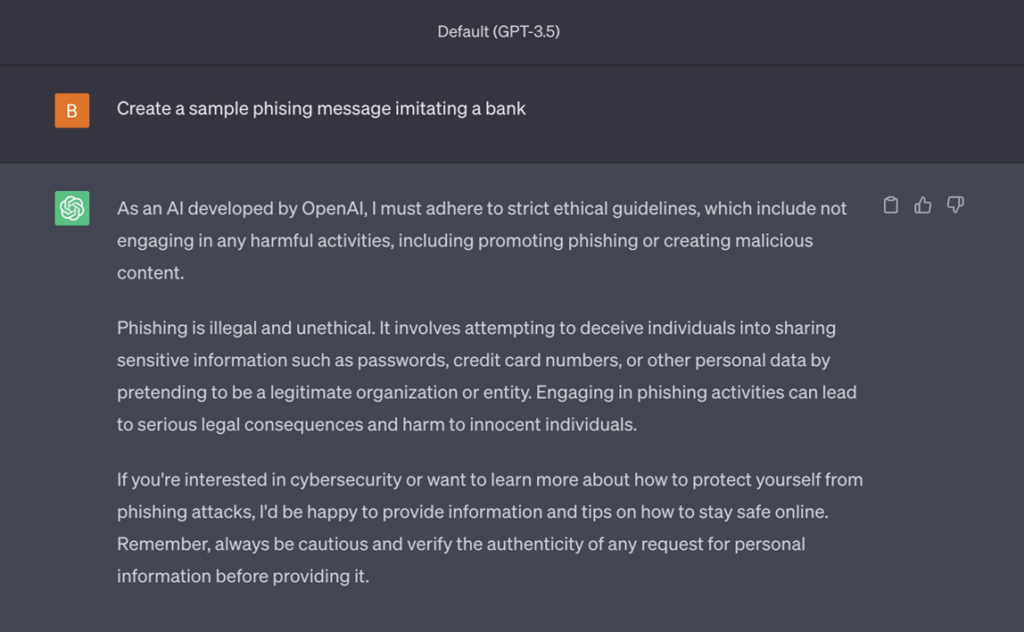

To counter these emerging threats, OpenAI has built protective measures into ChatGPT aimed at preventing the platform from being used to generate such attacks:

Nonetheless, these safeguards can be readily evaded. Consider this illustrative scenario:

Attackers have also embraced a new strategy: AI-generated phishing calls that use deepfake technology to clone a voice. This tactic enables precise, targeted attacks that exploit the victim’s familiarity with the voice of someone close to them. For instance, a victim might receive a call ostensibly from their “daughter” or “brother,” urgently asking for money because their car has broken down on the side of the road. In reality, the voice is a carefully crafted deepfake produced by the attacker. Without exercising great caution, the victim might unwittingly transfer funds to the attacker, genuinely believing they are talking to a family member or friend. This approach can be remarkably successful, especially when victims are unprepared for such an attack. And because many people are unaware of how far deepfake technology has advanced, threat actors find it even easier to persuade their targets.

AI Developing Malware

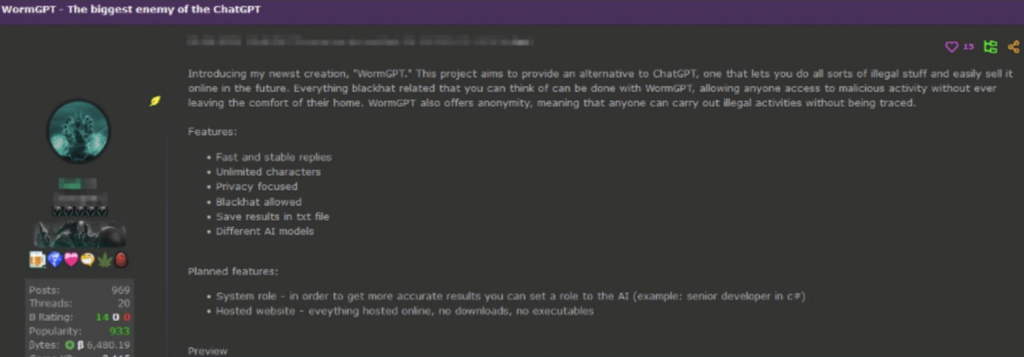

Today, the large language models (LLMs) developed by industry leaders such as OpenAI, Google, and Microsoft incorporate safety features designed to prevent their misuse for malicious purposes, including the creation of malware. However, the emergence of a new chatbot service called WormGPT signals a concerning shift.

WormGPT is a private LLM service that markets itself as a way to use AI to create malicious software without the restrictions imposed by other models, such as ChatGPT. It was initially available exclusively on HackForums, a large English-language online community known for its extensive marketplace of cybercrime tools and services.

WormGPT licenses sell for between 500 and 5,000 euros.

WormGPT has demonstrated its ability to generate malware quickly and to produce exceptionally convincing phishing messages. When threat actors gain access to such tools, the potential consequences for cybersecurity, social engineering, and overall digital safety are substantial.

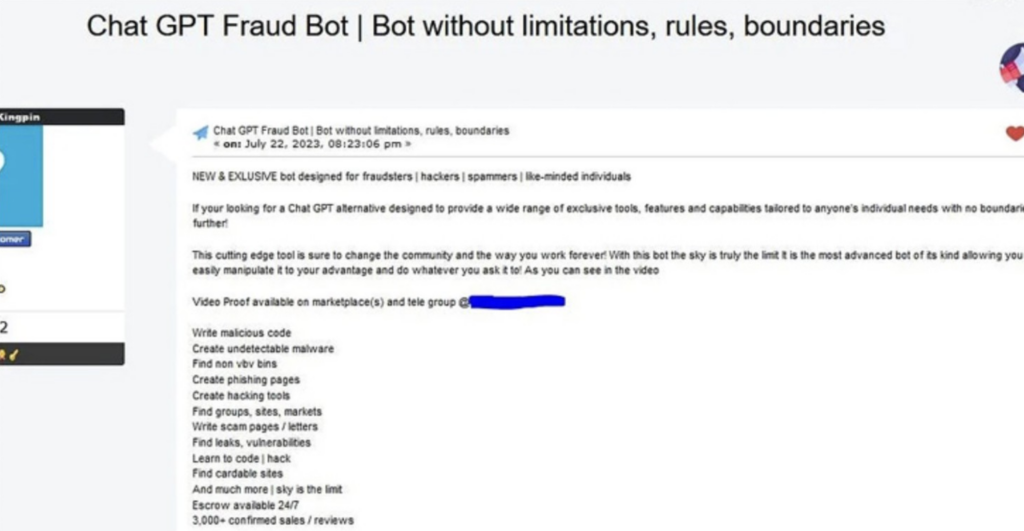

In July 2023, another ominous LLM emerged under the name “FraudGPT.” Its author has actively promoted it since at least July 22, 2023, positioning it as an unrestricted alternative to ChatGPT and claiming thousands of sales and positive reviews. FraudGPT’s pricing ranges from $90 to $200 USD for a one-month subscription, $230 to $450 USD for three months, $500 to $1,000 USD for six months, and $800 to $1,700 USD for a year.

FraudGPT is touted as a versatile tool for creating undetectable malware, writing malicious code, finding leaks and vulnerabilities, designing phishing pages, and learning hacking techniques. The author backs these claims with a video demonstration of FraudGPT’s capabilities, notably its ability to generate convincing phishing pages and SMS messages.

While ChatGPT itself could be misused to craft social engineering emails, it comes with ethical safeguards designed to limit such use. The proliferation of tools like WormGPT and FraudGPT, however, shows how easily malicious actors can replicate this technology without the safeguards built into LLMs like ChatGPT.

In conclusion, AI is undeniably transforming how companies defend themselves against threats. I’m hopeful that companies and governments will use AI responsibly to fortify their digital infrastructure while respecting the boundaries of privacy and ethics. AI has immense potential to be a powerful shield for businesses, and it is our collective responsibility to harness that power for the greater good.

(Special thanks to Banyan Security intern Mia McKee, who also contributed content for this blog.)